
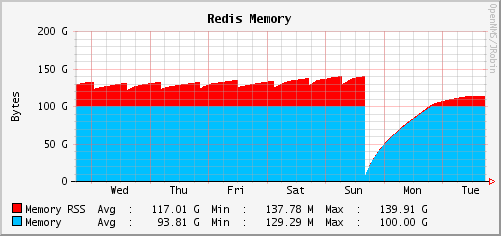

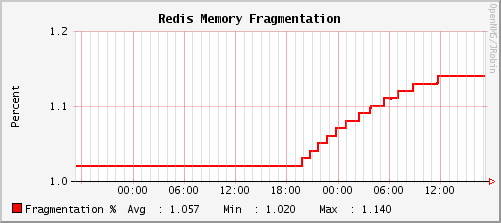

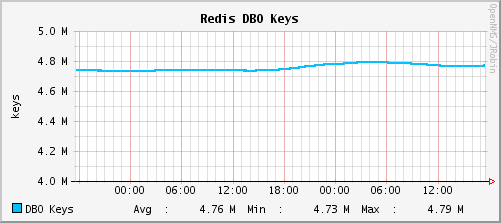

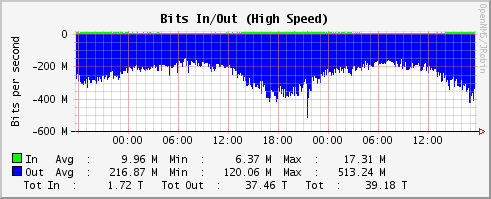

DISCLAIMER: This isn't a "bash redis" post. We use redis as a non-persistent key/value store here at Weebly and are happy with how it performs in that role. I'm thankful for all of the work Salvatore and the community have put into making redis a great key/value store. This post solely addresses using redis as an LRU cache replacement for memcached.

Ever since Danga Interactive's/LiveJournal's memcached burst onto the Internet scene, it has become the de facto general-purpose caching application for people running larger-scale Internet sites (or anyone just wanting to cache expensive operations). And why not? It's a pretty good program that's well written and has extensive library support. The major drawbacks to memcached are its slab allocator memory model and a maximum object size that is fixed at startup. Let's take a look at those.

The slab allocator is one way to carve up a finite amount of memory space. You tell memcached how much memory you want to use, and it carves up the memory into differing sized chunks, or slabs. For example: you'll get 1000 1KB slabs, 500 2KB slabs, 256 512KB slabs, etc. Those aren't actual numbers, but you get the idea. It goes all the way up to the maximum object size. The maximum object size is determined by the config file at startup; it cannot be resized on the fly. You have a little bit of control over how the slab allocation works (starting size and stepping of the slabs), but nothing really specific. It takes a while to tune the slab allocations to your use case.

So with lots of tuning and cache restarts required (each restart emptying the cache), is there anything better out there? Well, we thought redis would be better...but it's not. Redis doesn't use a slab allocator. Sounds great! Right? On paper it did. Let's take a look at the blog post written by redis' author on configuring redis as an LRU cache. He basically says, just set the maxmemory and eviction values, and redis will work as an LRU cache. We tried that.
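To make the slab carving described above concrete, here is a minimal Python sketch of how a set of slab size classes could be derived from a starting size and a stepping factor. It is an illustration, not memcached's actual code: the function names, the 96-byte starting chunk, the 1.25 growth factor, the 8-byte alignment, and the 1MB maximum object size are all assumptions chosen for the example.

```python
def slab_chunk_sizes(min_chunk=96, growth_factor=1.25,
                     max_item=1024 * 1024, align=8):
    """Return the chunk size of each slab class, smallest first.

    All defaults are illustrative assumptions, not values read from
    a real memcached instance.
    """
    sizes = []
    size = min_chunk
    while True:
        # Round the chunk size up to the next alignment boundary.
        size = -(-size // align) * align
        if size >= max_item:
            break
        sizes.append(size)
        # Step to the next class; always grow by at least one boundary.
        size = max(size + align, int(size * growth_factor))
    # The largest class holds objects of the maximum size.
    sizes.append(max_item)
    return sizes


def class_for(item_size, sizes):
    """Return the smallest chunk size that fits the item, or None."""
    for chunk in sizes:
        if item_size <= chunk:
            return chunk
    return None  # larger than the maximum object size


if __name__ == "__main__":
    sizes = slab_chunk_sizes()
    print(len(sizes), "slab classes, first few:", sizes[:8])
    print("a 100-byte item is stored in a chunk of", class_for(100, sizes))
```

Under these assumed parameters an item always lands in the smallest chunk that fits, so a 100-byte item occupies a 120-byte chunk and the difference is wasted. Anything larger than the maximum object size has no class at all, which is why that limit matters so much at startup.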
The results were mixed. The crux of the problem is this: no matter what you do and what you configure, redis' memory usage is completely unbounded and you will eventually run out of memory.

Let's back up a few steps and examine how to size memory values in memcached. In memcached, you roughly take 1GB (or so) less than installed RAM and use that as your cache size. 72GB of RAM? 71GB cache. 144GB of RAM? 143GB cache. That's basically it. The slab allocator will break up your cache into slabs and that's what you get. Run out of 512KB slabs but have lots of 2MB slabs when you want to write a 500KB object? Too bad. A 512KB object will be evicted even though you have "free space." Sounds lame. Maybe redis does it better?

In redis you set a "maxmemory" value. You're told this is the maximum size of the cache. That's true; it is the maximum size of the cache. However, it's not the maximum amount of memory that redis will use to store the cache. Redis allocates memory for objects on the fly up until its maxmemory value. After that, redis will "remove" an object from memory and insert the new object. The devil is in the details. Redis can't guarantee that the new value is inserted into the old object's memory address space, or even that it will completely fill the freed space. Over time, this leads to gaps in the memory space, or what's known as memory fragmentation. If you were to logically look at the memory used by redis, there would be holes or gaps without objects. If you were to look at memcached, it would be a single, solid chunk.

What's wrong with fragmentation? If you don't have something to periodically clean up the space and optimize the allocated memory, fragmentation will get so bad that you'll eventually run out of physical memory. Sure, the sum of your cache objects will be limited to the value of maxmemory, but you might be using twice that amount of RAM to store it. Sounds like a problem with redis, right? Surely they would want to fix this? Nah. Not really.
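One way to see the gap between the size of the cached data and the RAM actually consumed is to compare the `used_memory` and `used_memory_rss` fields that redis reports in its `INFO` output. The Python sketch below parses that text and computes their ratio. The helper names and the sample figures are assumptions made up for illustration (they mirror a case where 100GB of data occupies 140GB of RAM); they are not measurements from our servers.

```python
# Hypothetical excerpt of INFO output; the byte counts are invented
# so that 100 GiB of data occupies 140 GiB of resident memory.
SAMPLE_INFO = """\
# Memory
used_memory:107374182400
used_memory_rss:150323855360
"""


def parse_info(text):
    """Turn 'key:value' lines from INFO into a dict of strings."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and section headers
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def fragmentation_ratio(info_text):
    """Resident memory divided by memory redis allocated for data."""
    fields = parse_info(info_text)
    used = int(fields["used_memory"])
    rss = int(fields["used_memory_rss"])
    return rss / used


if __name__ == "__main__":
    print("fragmentation ratio:", fragmentation_ratio(SAMPLE_INFO))
```

A ratio well above 1 means the process holds noticeably more RAM than the objects it stores, which is the fragmentation overhead described above.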
There have been a few posts (some by me) mentioning this on the redis Google Groups board, but the response has basically been "cap your maxmemory at a value that works well enough with your fragmentation; it should level out." Except it doesn't level out. There's no knob or dial to control memory fragmentation cleanup in redis. It does perform some sort of cleanup, but the mechanism isn't exposed to operators. Even if there were such a knob, it would be tricky to set: tune it too low and you still run the risk of running out of memory on a very busy cache server; tune it too aggressively and your cache will slow down because it's constantly defragmenting. That's especially the case in a single-threaded program like redis.

Is part of the problem our use case? Probably. We store web pages in redis, and at high volume. Even with 100GB caches, the chance for fragmentation is higher with 1MB web pages than with 2KB strings. Still, you could exhaust all memory on the server writing 2KB strings if you wrote them fast enough. Even so, doesn't it seem wasteful to throw away 40GB of RAM to store 100GB of data?

Because redis is single threaded and doesn't expose its defragmentation mechanism, you really shouldn't use it as an LRU cache. Just stick with memcached.
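For reference, the "cap your maxmemory" advice amounts to the following arithmetic, sketched here in Python. The function name, the 1GiB headroom default, and the 1.4 ratio are assumptions for illustration, and the calculation only helps if the fragmentation ratio really does stabilize, which was not our experience.

```python
GIB = 1024 ** 3


def maxmemory_for(ram_bytes, expected_ratio, headroom_bytes=1 * GIB):
    """Largest maxmemory (in bytes) that should fit in RAM.

    expected_ratio is the assumed resident-to-data ratio (for example
    1.4 when 100GB of data occupies 140GB of RAM). headroom_bytes is
    memory left for the operating system; 1 GiB is an assumption.
    """
    if expected_ratio < 1.0:
        raise ValueError("expected_ratio must be at least 1.0")
    usable = ram_bytes - headroom_bytes
    return int(usable / expected_ratio)


if __name__ == "__main__":
    limit = maxmemory_for(144 * GIB, expected_ratio=1.4)
    print("maxmemory of roughly", round(limit / GIB, 1), "GiB on a 144 GiB host")
```

With these assumed numbers a 144GiB host ends up with a cache of only a little over 100GiB, compared with the 143GB cache the same machine gets under memcached's sizing rule.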

antirez

12/10/2013 01:52:29 am

Hello, could you please share what version of Redis you used and with what allocator? There was a problem that was fixed in recent versions. Basically, Redis 2.6 introduced a new algorithm that prevented latency spikes, but had a problem resulting in some cases in unbounded memory usage. This was fixed in 2.8 and AFAIK backported to 2.6; however, I'll verify whether I backported it or not in a few. Thanks.

antirez

12/10/2013 01:58:56 am

Yes, it was backported; the first 2.6 version to feature the new algorithm is 2.6.15.

antirez

12/10/2013 02:59:11 am

Sorry, can't reply to your reply (too nested?). Since the problem is not the bug reported and fixed in 2.6.15, I'll carefully read your blog post to understand exactly what problem you experienced and to provide a fix ASAP if possible. More news soon.

Author: A NOLA native just trying to get by. I live in San Francisco and work as a digital plumber for the joint that runs this thing (Square/Weebly). Thoughts are mine, not my company's.